THOUGHT LEADERSHIP - Maturing cybersecurity into digital safety

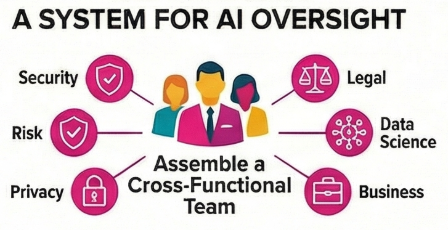

AI adoption is inevitable, but it succeeds only with robust governance. Author Bhojraj Parmar argues that poor governance erodes trust, whereas good governance fosters safety, trust, and prosperity. Rushing AI adoption invites "Shadow AI," data leaks, and insider threats. The blog advocates moving beyond narrow cybersecurity to Digital Safety, which prioritises the safety of humans, institutions, and communities. Its core message is that AI governance must align with Digital Safety Modelling so that all types of harm are accounted for when OKRs and KPIs are defined. This is fundamentally a business problem, not just a cybersecurity one: strong governance is essential for maintaining customer trust, adoption, and revenue.

Digital Safety Modelling is a structured process for identifying, understanding, and prioritising potential harms to humans arising from their interaction with or dependence upon technological systems. Where threat modelling asks "what could go wrong with this system?", Digital Safety Modelling asks "how could this system's behaviour, intended or otherwise, harm the human?"
