The CEO has just returned from Silicon Valley, excited, and declares that we are going to implement an AI-first strategy across the business: “These are special times. We have an opportunity to be leaders and do right by our customers and stakeholders.” Everyone claps, looks excited and wants to contribute to the adoption of LLM and GenAI solutions across the business. Your task now is to work out how to make this part of the business’s OKRs and KPIs, and how to report on the adoption of AI solutions across the business. Change for the better is simply how progress happens.

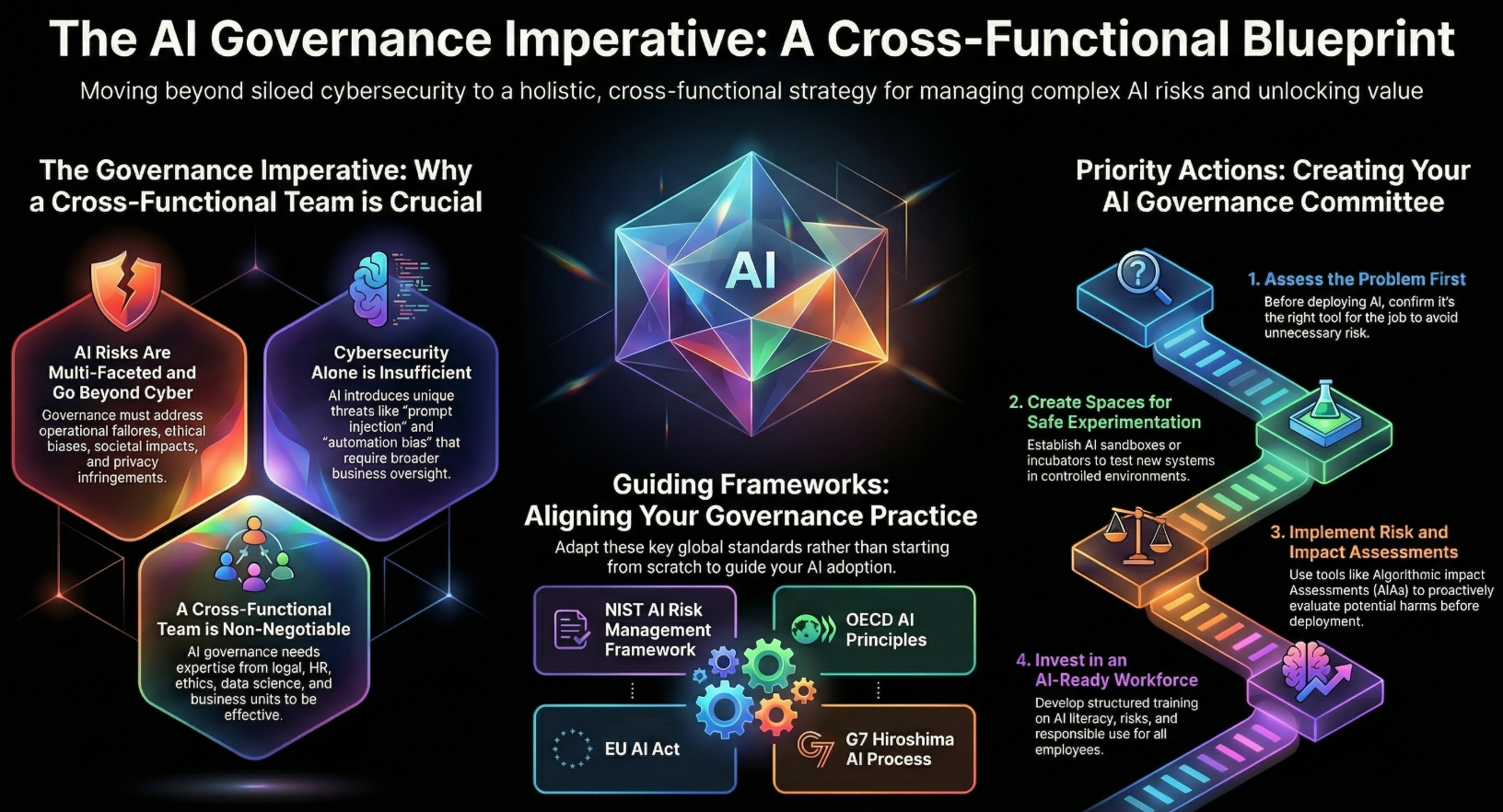

How do you know the change is “for the better” and that no one is harmed by it? It is not a simple question to answer. Many boards of directors and executives would ask: How are we governing the change? How can we keep innovating and changing for the better without harming anyone, or the business, in the process? Would this impact our customers and their customers? The key to answering these questions lies in how a change is governed, monitored and empowered. Governance is not simply about having oversight, skills or managing risk. We believe poor governance erodes trust. Governance is therefore a mechanism to foster the safety, trust and prosperity of all stakeholders.

The inevitability of AI-empowered solutions has been an accepted reality for a long time. One could argue that we have been using some form of intelligent solution since the beginning of digital systems. That changed dramatically, however, once deep learning and neural networks were commoditised. The shift was marked not just by an emotional moment for humanity, when AlphaGo won against masters of Go, an ancient and very complex game. AI also enabled practical advances in Physics and Chemistry that went on to help humans win Nobel Prizes in 2024. So there is no argument about the value digital technologies bring to human progress. This is not intended to be a historical write-up of why we should use and adopt AI solutions. It is about how we go about it, so that digital safety principles are applied from the moment the business value of an AI solution is first considered.

The issue comes to the fore when every shiny AI solution is adopted because of clever marketing and sales campaigns, or in the rush to be first, without considering how it could bring harm (physical, mental or accumulative) to people. This is where we hear about Shadow AI, data leaks, personal chats with AI bots being exposed, company-sensitive data being exposed and insiders stealing data. When not governed well, AI solutions can be a double-edged sword.

Let’s face it, cybersecurity is not the first thing that comes to mind when an executive pushes for an OKR or KPI on the use of AI across all business lines. Why would it be? One reason it is overlooked is the friction it is perceived to add; the other is the short-sighted view of cybersecurity that starts and ends with the protection of data and systems.

This is where Digital Safety, which prioritises the safety of humans, shines. When the safety of individuals, institutions and communities is considered, and AI governance is aligned to digital safety modelling approaches, harm (direct, indirect and accumulative) becomes part of AI governance when defining OKRs, KPIs and value realisation metrics.

Consider the principle of justice. It goes beyond simply being equitable, fair and inclusive: it asks us to build solutions that deliver just outcomes for everyone involved by addressing the systemic issues that may prevent them. Let’s break it down into practical terms.

Every organisation considering AI solutions to take advantage of the value LLMs and GenAI solutions offer is doing the right thing. Why hold back from progress and setting trends? And why slow down if it brings more value to your business, customers and stakeholders?

Well, as long as it does not cost you your customers’ trust, there is no reason to hold back. Replace “AI solutions” with anything else and the answer is the same, provided it helps solidify customer trust. Trust drives adoption, revenue and ambassadorship.

So if governance can help us maintain and secure customer trust, there is no need to hold back on innovation, right? As you can see, this is hardly a cybersecurity problem. It is a business problem, and one that is tackled much more comprehensively through a digital safety lens.

Erosion of trust is an outcome of poorly governed AI adoption, whereas well-thought-out governance at board and executive level can earn and strengthen that trust.

At Qubit Cyber, our mission is to shift the focus from simply protecting data and systems (Cybersecurity) to protecting humans in the digital era (Digital Safety), which creates trust in digital systems while keeping humans safe. We will be publishing a series of blogs on AI governance as we tackle practical issues with our customers across New Zealand. Please feel free to reach out with any feedback or questions.
